Firefox has been outperforming IE in every department for years, and version 3 is speedier than ever.

But tweak the right settings and you could make it faster still, more than doubling your speed in some situations, all for about five minutes' work and for the cost of precisely nothing at all. Here's what you need to do.

1. Enable pipelining

Browsers are normally very polite, sending a request to a server then waiting for a response before continuing. Pipelining is a more aggressive technique that lets them send multiple requests before any responses are received, often reducing page download times. To enable it, type about:config in the address bar, double-click network.http.pipelining and network.http.proxy.pipelining so their values are set to true, then double-click network.http.pipelining.maxrequests and set this to 8.

Keep in mind that some servers don't support pipelining, though, and if you regularly visit a lot of these then the tweak can actually reduce performance. Set network.http.pipelining and network.http.proxy.pipelining to false again if you have any problems.
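To see why pipelining can help, here is a rough back-of-the-envelope model in Python. It is only a sketch: the function names and the timings are made up for illustration, and it ignores the parallel connections, server limits and other details that shape real page loads.

```python
import math


def sequential_time(n_requests, rtt_ms, transfer_ms):
    """Polite browser: every request waits for the previous response,
    so each one pays a full round trip plus its transfer time."""
    return n_requests * (rtt_ms + transfer_ms)


def pipelined_time(n_requests, rtt_ms, transfer_ms, max_requests=8):
    """Pipelining browser: up to max_requests are sent back to back,
    so each batch pays the round trip only once."""
    batches = math.ceil(n_requests / max_requests)
    return batches * rtt_ms + n_requests * transfer_ms


# Hypothetical page: 16 small files, 100 ms round trip, 10 ms transfer each.
print(sequential_time(16, 100, 10))  # 1760
print(pipelined_time(16, 100, 10))   # 360
```

The gap shrinks as files get larger or the round trip gets shorter, which is one reason the benefit varies so much from site to site.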

2. Render quickly

Large, complex web pages can take a while to download. Firefox doesn't want to keep you waiting, so by default it will display what it's received so far every 0.12 seconds (the "content notify interval"). While this helps the browser feel snappy, frequent redraws increase the total page load time, so a longer content notify interval will improve performance.

Type about:config and press [Enter], then right-click (Apple users ctrl-click) somewhere in the window and select New > Integer. Type content.notify.interval as your preference name, click OK, enter 500000 (that's five hundred thousand microseconds, or half a second, not fifty thousand) and click OK again.

Right-click again in the window and select New > Boolean. This time create a value called content.notify.ontimer and set it to True to finish the job.
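The arithmetic behind this tweak is easy to check. The Python sketch below assumes the preference is measured in microseconds and treats the number of redraws as simply load time divided by the interval; the three-second page and the helper names are illustrative assumptions, not anything Firefox exposes.

```python
MICROSECONDS_PER_SECOND = 1_000_000


def seconds_to_pref(seconds):
    """Convert a time in seconds to the microsecond value typed into about:config."""
    return round(seconds * MICROSECONDS_PER_SECOND)


def redraws_during_load(load_time_us, notify_interval_us):
    """Rough count of intermediate redraws while a page is loading."""
    return load_time_us // notify_interval_us


default_interval = seconds_to_pref(0.12)  # the 0.12 second default
tweaked_interval = 500_000                # the value suggested above
load_time = seconds_to_pref(3)            # hypothetical three-second page

print(default_interval)                                  # 120000
print(redraws_during_load(load_time, default_interval))  # 25
print(redraws_during_load(load_time, tweaked_interval))  # 6
```

Fewer redraws means less work per page, at the price of seeing the page fill in less smoothly.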

3. Faster loading

If you haven't moved your mouse or touched the keyboard for 0.75 seconds (the content switch threshold) then Firefox enters a low frequency interrupt mode, which means its interface becomes less responsive but your page loads more quickly. Reducing the content switch threshold can improve performance, then, and it only takes a moment.

Type about:config and press [Enter], right-click in the window and select New > Integer. Type content.switch.threshold, click OK, enter 250000 (a quarter of a second) and click OK to finish.
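The rule described above boils down to a single comparison. This toy Python model is not Firefox's actual code, and the function and constant names are invented for illustration, but it shows how lowering the threshold changes which mode the browser would be in after the same pause.

```python
DEFAULT_THRESHOLD_US = 750_000  # 0.75 seconds, the default described above
TWEAKED_THRESHOLD_US = 250_000  # a quarter of a second


def interrupt_mode(idle_us, threshold_us):
    """Return the mode a browser following the article's rule would choose,
    given how long (in microseconds) the user has been idle."""
    if idle_us >= threshold_us:
        return "low-frequency"   # pages load faster, interface less responsive
    return "high-frequency"      # interface stays responsive


idle = 400_000  # the user paused for 0.4 seconds

print(interrupt_mode(idle, DEFAULT_THRESHOLD_US))  # high-frequency
print(interrupt_mode(idle, TWEAKED_THRESHOLD_US))  # low-frequency
```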

4. No interruptions

You can take the last step even further by telling Firefox to ignore user interface events altogether until the current page has been downloaded. This is a little drastic as Firefox could remain unresponsive for quite some time, but try this and see how it works for you.

Type about:config, press [Enter], right-click in the window and select New > Boolean. Type content.interrupt.parsing, click OK, set the value to False and click OK.
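If you'd rather not create each of these content preferences by hand, Firefox also reads a user.js file from your profile folder at startup, with one user_pref line per setting. The Python sketch below just prints those lines for the values used in tips 2 to 4; the helper names are hypothetical, and finding your profile folder and saving the file there is left to you.

```python
def format_pref(name, value):
    """Render one preference as a user.js line (booleans and integers only)."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        literal = "true" if value else "false"
    elif isinstance(value, int):
        literal = str(value)
    else:
        raise TypeError(f"unsupported preference type for {name}")
    return f'user_pref("{name}", {literal});'


# Values taken from tips 2, 3 and 4 above.
CONTENT_PREFS = {
    "content.notify.interval": 500000,
    "content.notify.ontimer": True,
    "content.switch.threshold": 250000,
    "content.interrupt.parsing": False,  # the drastic one: drop it if Firefox feels frozen
}


def render_user_js(prefs):
    """Join all preferences into the text of a user.js file."""
    return "\n".join(format_pref(name, value) for name, value in prefs.items())


print(render_user_js(CONTENT_PREFS))
```

Settings in user.js are reapplied on every start, so delete a line from the file if you want to change that preference back in about:config.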

5. Block Flash

Intrusive Flash animations are everywhere, popping up over the content you actually want to read and slowing down your browsing. Fortunately there's a very easy solution. Install the Flashblock extension (flashblock.mozdev.org) and it'll block all Flash applets from loading, so web pages will display much more quickly. And if you discover some Flash content that isn't entirely useless, just click its placeholder to download and view the applet as normal.

6. Increase the cache size

As you browse the web, Firefox stores site images and scripts in a local memory cache, where they can be speedily retrieved if you revisit the same page. If you have plenty of RAM (2 GB or more), leave Firefox running all the time and regularly return to the same pages, then you can improve performance by increasing this cache size. Type about:config and press [Enter], then right-click anywhere in the window and select New > Integer. Type browser.cache.memory.capacity, click OK, enter 65536 and click OK, then restart your browser to get the new, larger cache.
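To pick a different cache size, it helps to know what 65536 means. The short Python sketch below assumes the preference is measured in kilobytes, which the steps above don't spell out, so treat the unit as an assumption; the helper names are illustrative only.

```python
KB_PER_MB = 1024


def cache_capacity_kb(megabytes):
    """Convert a cache size in megabytes to the value typed into about:config
    (assuming browser.cache.memory.capacity is measured in kilobytes)."""
    return megabytes * KB_PER_MB


def share_of_ram_percent(cache_mb, ram_mb):
    """How much of the machine's memory the cache could occupy, as a percentage."""
    return cache_mb / ram_mb * 100


print(cache_capacity_kb(64))           # 65536
print(share_of_ram_percent(64, 2048))  # 3.125
```

On that assumption, the suggested value is a 64 MB cache, or roughly 3% of the memory on a 2 GB machine.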

7. Enable TraceMonkey

TraceMonkey is a new Firefox feature that converts slow JavaScript into super-speedy x86 code, and so lets it run some functions anything up to 20 times faster than the current version. It's still buggy, so it isn't available in the regular Firefox download yet, but if you're willing to risk the odd crash or two then there's an easy way to try it out.

Install the latest nightly build (ftp://ftp.mozilla.org/pub/firefox/nightly/latest-trunk/), launch it, type about:config in the address bar and press Enter. Type JIT in the filter box, then double-click javascript.options.jit.chrome and javascript.options.jit.content to change their values to true, and that's it - you're running the fastest Firefox JavaScript engine ever.

8. Compress data

If you've a slow internet connection then it may feel like you'll never get Firefox to perform properly, but that's not necessarily true. Install toonel.net (toonel.net) and this clever Java applet will re-route your web traffic through its own server, compressing it at the same time, so there's much less to download. And it can even compress JPEGs by allowing you to reduce their quality. This all helps to cut your data transfer, useful if you're on a limited 1 GB-per-month account, and can at best double your browsing performance.

In mid-2007, after a battle with copyright group SABAM, a court in Belgium

In mid-2007, after a battle with copyright group SABAM, a court in Belgium  It was at this point J.S. and her parents filed a lawsuit against the school district, the school, the superintendent, and McGonigle. The attorneys for J.S. argued that the school violated her First Amendment rights to free speech and that the Constitution prohibits the school district from disciplining a student's out-of-school conduct that does not cause a disruption of classes or school administration. As an example, they presented the US Supreme Court case of Tinker v. Des Moines from 1969, which ruled that students do not shed their constitutional rights to free speech when they enter the schoolhouse.

It was at this point J.S. and her parents filed a lawsuit against the school district, the school, the superintendent, and McGonigle. The attorneys for J.S. argued that the school violated her First Amendment rights to free speech and that the Constitution prohibits the school district from disciplining a student's out-of-school conduct that does not cause a disruption of classes or school administration. As an example, they presented the US Supreme Court case of Tinker v. Des Moines from 1969, which ruled that students do not shed their constitutional rights to free speech when they enter the schoolhouse.  Ignorance of the law may be no defense against being held liable for infringement, but it can put a serious cap on damages. Under the Copyright Act, infringement is normally punishable by fines of up to $750 to $30,000 per act, and the upper limit can be raised to $150,000 if the infringement is deemed malicious. But for cases of innocent infringement, the judge can reduce the damages below the $750 floor. In the case of Maverick v. Harper, the judge told the record labels they could accept damages of $200 per song or have a jury decide what the total damages should be; the RIAA has chosen a jury trial over the damages.

Ignorance of the law may be no defense against being held liable for infringement, but it can put a serious cap on damages. Under the Copyright Act, infringement is normally punishable by fines of up to $750 to $30,000 per act, and the upper limit can be raised to $150,000 if the infringement is deemed malicious. But for cases of innocent infringement, the judge can reduce the damages below the $750 floor. In the case of Maverick v. Harper, the judge told the record labels they could accept damages of $200 per song or have a jury decide what the total damages should be; the RIAA has chosen a jury trial over the damages.

Steve Demeter developed the iPhone puzzle game

Steve Demeter developed the iPhone puzzle game

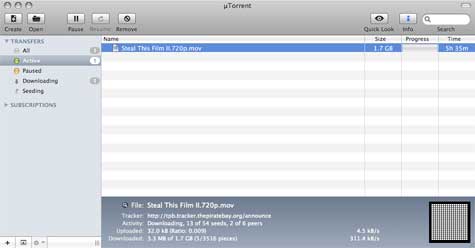

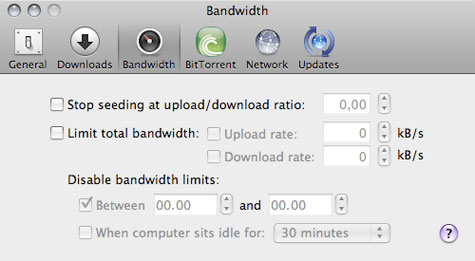

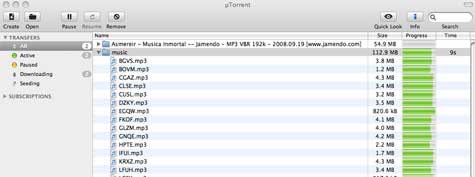

Thus far, only Windows users have had the pleasure of running uTorrent. The client saw its first public release in September 2005, and soon became the most widely used BitTorrent application. In 2006, uTorrent was

Thus far, only Windows users have had the pleasure of running uTorrent. The client saw its first public release in September 2005, and soon became the most widely used BitTorrent application. In 2006, uTorrent was