I want to start out by saying: I LOVE Google Chrome. I recently switched from Flock and made Chrome my primary browser. Calling it the “best browser I’ve ever used” would be a bit much, but it’s definitely in the top tier. This article isn’t about how great Chrome is, though.

A little while ago, Google announced the removal of the “Beta” tag from its brand-new browser. I have heard speculation that this is because computer manufacturers won’t bundle software with “Beta” hanging around its neck. Google applications normally stay in Beta for a very long time (see: Gmail), but after only a few months, Chrome is out in the wild with no reservations.

While this may be more of a marketing stunt than anything else, a “Gold” version of Chrome (that’s ironic!) must now take on the same responsibilities as the other browsers that have shed their training wheels. Note that this list excludes most (but not all) of the things Google has explicitly promised to fix. The items are in NO particular order.

1. More Extensive Options

To be honest, Chrome has fewer options than most of the freeware I review regularly. Google has stripped out everything except the options that are absolutely necessary, probably to test whether users actually miss any of the common ones. Here are the areas I’d like to see enhanced or simply restored:

- Allow users to set preferred media associations. In other words, restore the functionality of the “Applications” tab in Firefox options which allows the user to choose which plug-ins or programs handle certain types of media.

- Add in options for better tab management (discussed later in this article).

- Basic history controls (like how long to keep browsing history) would be nice. History has been split out into a separate “History” tab, but it has been oversimplified.

I will note that Chrome made it very easy for me to compare Firefox’s options with its own, because the Chrome Options dialog doesn’t lock you out of the main browser window. Definitely a nice touch.

2. Compatibility Mode

For a very long time, Firefox was plagued by websites that would only allow Internet Explorer (IE). While this is still an issue, many secure or proprietary websites have since been reworked so that they support Firefox too.

Chrome is, in this sense, the new Firefox. A large number of people already run Chrome as their primary browser (and more will follow if Google starts making deals to bundle it with new PCs), but there is no easy way to work around browser incompatibility.

Firefox users worked around incompatibility by emulating IE with an extension called IE Tab. Essentially, IE Tab ran Internet Explorer inside Firefox through a function called “Chrome” (no relation to the subject of today’s article, as far as I’m aware). While this worked in almost every case, it seems that a better solution could be built into Chrome (the browser) itself.

Why not give users the option to render the page using either their installed copies of IE or Firefox, in addition to Chrome? For the less experienced users who choose Chrome for its simplicity, this will remove one more headache whenever they try to access arcanely designed websites. This is the kind of thing my mom needs, even though she might not understand why.

3. Please Fix Flash!

Seriously! Flash is one of the most pervasive web components out there. While several browsers seem to be having problems with Flash at the moment, my experience with Chrome has been the worst. Running the plug-in separately from the browser’s primary process is a blessing sometimes, but the plug-in itself crashes all the time. When Flash crashes in Chrome, it kills every Flash element on every open page. It can be very aggravating to lose idle Flash games or buffering videos.

There are some proposed fixes out there, but most of them are of the “uninstall, reinstall” kind and none have worked for me so far.

4. Better Tab Management

If you’re at all like me, you have no fewer than 15 tabs open at any one time. There are just so many gosh-darn cool things on the internet, and tabs are a great way to store everything you want to read later. Unfortunately, every open tab consumes memory (especially tabs with lots of Flash or Java elements), which is terrible for performance. Chrome has done an excellent job of minimizing memory leaks and running each tab in its own process: if one tab freezes, the others survive.

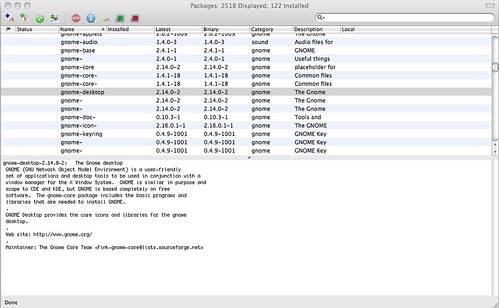

My grievance with Chrome’s tabs is the layout. While I love how the tabs ARE the browser’s upper boundary, the interface breaks down when too many tabs are open at once. The image above was taken with only 17 tabs open. With as many as 30 open, the icons disappear altogether and every tab looks identical. A simple solution would be to let some tabs overflow past the edge of the screen and make them accessible through an arrow button or mouse scrolling (as Firefox does). This might break Google’s aesthetic, but I’d be happier as a user.

5. Extension Support

Google has already promised in a blog post that an extension platform is on its way. Awesome. I have just three requests for Google:

- Call them “Extensions” - not Add-ons, Plug-ins, Widgets, or Tools.

- Cater to the people who are already making high quality extensions for Firefox - make it easy for them to port their projects over to Chrome.

- Take extensions to the next level. Whether it’s a great developer pack or better integration with Google Docs, I feel like there is still new ground to plow in this field.

6. Built-in RSS Support

Almost all browsers now offer some kind of auto-detection for a site’s RSS feeds. Flock did the best job in my opinion. For some reason, Chrome has NO features related to RSS.

Image from Vox Daily

What needs to happen is for Chrome to “notice” whenever a site has a general RSS feed and notify the user, either with a distinct icon or a simple option to open that feed. If they wanted to include Google Reader integration, I wouldn’t complain.
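For the curious, feed “noticing” of this kind is usually done through feed autodiscovery: a site advertises its feed with a `<link rel="alternate">` tag whose `type` is an RSS or Atom MIME type, and the browser only has to look for it. Here is a minimal Python sketch of that check, assuming the page’s HTML is already in hand; `FeedLinkFinder` and `find_feeds` are hypothetical names for illustration, not anything Chrome actually ships.

```python
from html.parser import HTMLParser

# MIME types commonly used to advertise feeds via autodiscovery links.
FEED_TYPES = {"application/rss+xml", "application/atom+xml"}


class FeedLinkFinder(HTMLParser):
    """Collects the href of every <link rel="alternate"> tag that points to a feed."""

    def __init__(self):
        super().__init__()
        self.feeds = []

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names, but not attribute values.
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        rels = attr.get("rel", "").lower().split()
        feed_type = attr.get("type", "").lower()
        if "alternate" in rels and feed_type in FEED_TYPES and attr.get("href"):
            self.feeds.append(attr["href"])


def find_feeds(html):
    """Return the feed URLs a page advertises, in document order."""
    finder = FeedLinkFinder()
    finder.feed(html)
    finder.close()
    return finder.feeds


page = '<html><head><link rel="alternate" type="application/rss+xml" href="/feed.xml"></head></html>'
print(find_feeds(page))  # ['/feed.xml']
```

If the list comes back non-empty, the browser has its cue to show a feed icon or offer to open the feed (or hand it to Google Reader).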

7. Little Things and Beta Bugginess

Aside from these major topics, quite a few “little things” have been bothering me over the last month or so. Some are features I really liked in Flock/Firefox and wish Chrome had too. Others are annoyances that can be chalked up to the fact that this is still basically Beta software, no matter what Google calls it.

- Switching from tab to tab has been slow for me. Often the tab I switch to stays blank for quite some time before the page appears, even though the page had already finished loading.

- There is no “View image properties” option in the right-click context menu. There is an “Inspect element” option, but it doesn’t serve the same purpose nearly as well.

- An extension called CoLT lets you copy the text of a link, its location (URL), or both in a specific format. This is perfect for inserting links into blogs, microblogs, and emails. I’d love to see this added natively to Chrome.

- I’d love to see spellcheck built in.

Got Chrome gripes of your own? Post your own issues in the comments.
